Abstract: This post looks at the need to crawl notorious sites on the World Wide Web for leaked, compromised, or hacked data, and to put a mechanism in place that reports such findings so that the necessary action can be taken quickly and the impact of the attack minimized.

Why?

As we know, in today’s world no amount of security can guarantee that a system is impenetrable. The least we can do is step up our guard and put a mechanism in place that minimizes the damage in a worst-case scenario.

Hackers have perfected a few techniques to make money from the data they plunder.

Hacked data may contain email credentials, social network credentials, API keys, subnet IPs, password hashes, machine configuration details, and so on. Hackers sell the data to the victim’s rivals or competitors, or in certain cases end up blackmailing the victim.

Hackers and cyber criminals tend to share the results of their data heists on the open web, on sites such as Pastebin, Slexy, Reddit, 4chan, and many other loosely moderated sites. They often share glimpses of the hacked data to gain attention and attract interested buyers for the entire data dump.

This makes it evident that we need to be constantly on the lookout for such data leaks on forums, text sharing sites, social media, and so on. Since the volume of data to be monitored is large, doing this manually would be impractical, so we need a system or application to do it for us. Once leaked data reaches us, it is up to the security team to take the necessary action, which may be anything from changing passwords or API keys to suspending accounts, or whatever else suits the situation.

Scope

To define a monitoring system that identifies data leaks concerning a specific individual or company, along with plausible data sources and tools that generate reports. The action to be taken on a data leak depends entirely on the type of system or data involved, and is not in the scope of this document.

Is Everyone Else Doing It?

Yes! Many big companies have a system in place for the sole purpose of looking for leaks of their own data on the open web. Ever since the infamous “50 Days of Lulz” hack, everyone has been rushing toward this approach. Cyber security companies do this constantly.

Data Source 1: As shown in the diagram above, data from text sharing sites is pulled in for analysis via their APIs, and the regular expressions in our pattern matching engine pick out any leaked data.
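To make the pattern matching step concrete, here is a minimal sketch in Python. It assumes the paste text has already been fetched; the pattern names, the `example.com` domain, and the `find_leaks` helper are illustrative choices of mine, not part of any tool mentioned in this post.

```python
import re

# Hypothetical patterns; tune these for the organisation being monitored.
PATTERNS = {
    # Any address at the monitored domain (example.com is a placeholder).
    "email": re.compile(r"[A-Za-z0-9._%+-]+@example\.com", re.IGNORECASE),
    # Strings shaped like an AWS access key ID: "AKIA" plus 16 characters.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # 32 hex characters, the shape of an MD5 hash.
    "md5_hash": re.compile(r"\b[a-fA-F0-9]{32}\b"),
}


def find_leaks(text):
    """Return a dict mapping pattern name to the matches found in text."""
    hits = {}
    for name, pattern in PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[name] = matches
    return hits


sample = "dump: alice@example.com:5f4dcc3b5aa765d61d8327deb882cf99"
print(find_leaks(sample))
```

Patterns this loose will produce false positives (any 32-character hex string looks like an MD5 hash), so in practice the hits would still need review by a person or a stricter second pass.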

Data Source 2: There are a few Twitter bots out there, such as @dumpmon, that monitor hackers’ playgrounds, forums, and popular sharing platforms, and tweet whenever a leak is detected.

Data from such bots is useful because it gives us a bounded amount of data to search; passing it to our PR-Engine takes care of the rest of the filtering.
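As a rough illustration of how bot tweets narrow the search, the sketch below pulls paste links out of tweet text so that only those pastes need to be downloaded and filtered. Fetching the tweets is left out, and the host list and the `paste_urls` helper are assumptions made for this example.

```python
import re
from urllib.parse import urlparse

# Assumed set of paste hosts worth following; extend as needed.
PASTE_HOSTS = {"pastebin.com", "slexy.org"}

URL_RE = re.compile(r"https?://\S+")


def paste_urls(tweet_texts):
    """Collect unique paste URLs, in first-seen order, from tweet texts."""
    seen = set()
    urls = []
    for text in tweet_texts:
        for url in URL_RE.findall(text):
            # Drop punctuation that often trails a URL in running text.
            url = url.rstrip(".,)")
            host = (urlparse(url).hostname or "").lower()
            if host.startswith("www."):
                host = host[4:]
            if host in PASTE_HOSTS and url not in seen:
                seen.add(url)
                urls.append(url)
    return urls
```

Each URL returned here would then be fetched and its content handed to the filtering engine.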

Data Source 3: Running custom search engine queries and integrating tools such as Scumblr with our system would help us get to leaked data more quickly.

The key consideration here is how quickly we can get the data that interests us, while consuming as few resources as possible.

Tools: As far as I could find, there are no fully fledged commercial tools for this purpose. While exploring, I did find a few good tools.

Scumblr & Sketchy: These tools were developed and open sourced by Netflix. Their purpose is to collect information on the web that interests you or your company. They are currently used by the Netflix security team.

HaveIBeenPwned: This is an online tool where you can search for an account, such as an email address, and it shows whether that account has been compromised. It has API support too.

Amazon also monitors the web; there have been multiple instances where users were alerted that the API keys for their instances were on the open web. We do not know which tool they use for this purpose.

However, there is an open source tool called Security Monkey, which monitors policy changes and alerts on insecure configurations in an AWS account.

Pystemon

I tried out pystemon, an open source tool built in Python.

Below are the results.

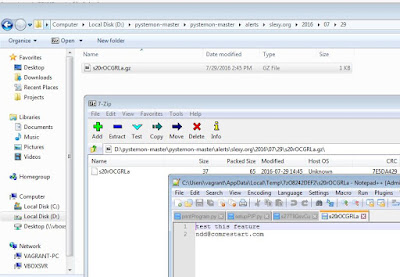

Step 1: I posted a test email ID with some data to the text sharing site Slexy.

Step 2: I configured my system to be able to run pystemon.

Step 3: I set up the regular expression I was looking for in the tool configuration.

Step 4: I ran the program.

Step 5: Within a minute, the text I had shared in step 1 was downloaded, along with all the information surrounding it, into the Alerts folder.
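The core of what the steps above exercise can be sketched in a few lines of Python. This is not pystemon's actual code or configuration format; it is a simplified illustration that assumes some fetcher (not shown) has already downloaded the pastes, and `save_alerts` is a name I made up for it.

```python
import re
from pathlib import Path


def save_alerts(pastes, pattern, alerts_dir):
    """Write every paste whose text matches `pattern` into alerts_dir.

    `pastes` is an iterable of (paste_id, text) pairs that have already
    been downloaded. Returns the list of files written.
    """
    alerts_path = Path(alerts_dir)
    alerts_path.mkdir(parents=True, exist_ok=True)
    regex = re.compile(pattern)
    written = []
    for paste_id, text in pastes:
        if regex.search(text):
            # Keep the file name safe even if the paste ID has odd characters.
            safe_id = re.sub(r"[^A-Za-z0-9_-]", "_", paste_id)
            target = alerts_path / f"{safe_id}.txt"
            target.write_text(text, encoding="utf-8")
            written.append(target)
    return written
```

Calling `save_alerts(pastes, r"test\.user@example\.com", "alerts")` would leave one file per matching paste in an `alerts` folder, with the whole paste saved so the surrounding context is kept.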

This is just a simple demonstration of how huge volumes of data can be mined easily with the tools available; by customizing such tools we can pave the way to effective monitoring of the web for confidential data leaks. Most of these tools are built with Python. Python is commonly used to scrape data from large dumps, and it is effective at doing so.

Conclusion: Using the information in this document as a starting point, we can set up an effective system or application with multiple inbound data sources to monitor the web and minimize the impact on customers and victims. This adds trust in the brand, which is not only essential but pivotal in today’s world, where security can be an illusion.
